Abstract

Modern artistic productions increasingly demand automated choreography generation that adapts to diverse musical styles and individual dancer characteristics. Existing approaches often fail to produce high-quality dance videos that harmonize with both the musical rhythm and a user-defined choreography style, which limits their applicability in real-world creative contexts. To address this gap, we introduce ChoreoMuse, a diffusion-based framework that uses SMPL-format parameters and a variant of them as intermediaries between music and video generation, thereby overcoming the usual constraints imposed by video resolution. Critically, ChoreoMuse supports style-controllable, high-fidelity dance video generation across diverse musical genres and individual dancer characteristics, and it can handle any reference individual at any resolution. Our method employs a novel music encoder, MotionTune, to capture motion cues from audio, ensuring that the generated choreography closely follows the beat and expressive qualities of the input music. To quantitatively evaluate how well the generated dances match both musical and choreographic styles, we introduce two new metrics that measure alignment with the intended stylistic cues. Extensive experiments confirm that ChoreoMuse achieves state-of-the-art performance across multiple dimensions, including video quality, beat alignment, dance diversity, and style adherence, demonstrating its potential as a robust solution for a wide range of creative applications.

Method

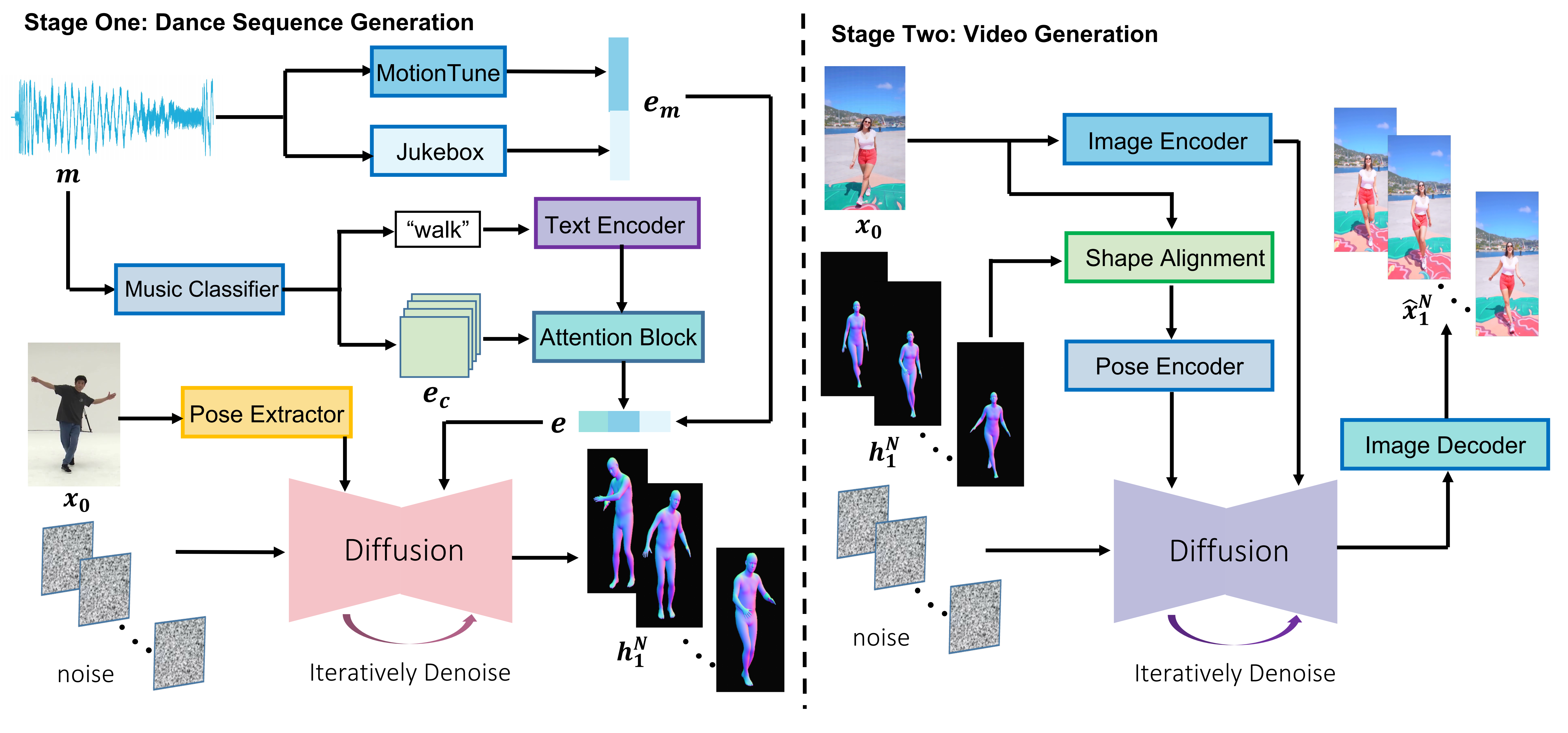

The training framework of ChoreoMuse. In Stage one, the dance sequence generator is trained using a reference image \(x_0\) and a music clip \(m\). Music embeddings \(\boldsymbol{e}_m\) are extracted via MotionTune and Jukebox, while a classifier predicts the music-type caption that determines the choreography style. The classifier’s feature vector \(\boldsymbol{e}_c\) is combined with the text-encoded choreography style using attention blocks to produce a final style embedding, which is then merged with \(\boldsymbol{e}_m\) as input to the diffusion model. Meanwhile, a pose extractor processes \(x_0\) to create an initial pose mask for constrained training. In Stage two, the video generator is trained by first aligning \(x_0\) with the generated dance sequence \(h_1^N\). The aligned sequence is then encoded by a pose encoder and used, together with an encoded reference image, as a condition for the diffusion model. Finally, the diffusion model synthesizes the complete dance video \(\hat{x}_1^N\).
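
To make the Stage one conditioning path concrete, the following is a minimal sketch, not the authors' implementation. It assumes a single-head scaled dot-product attention with no learned projections, in which the classifier feature \(\boldsymbol{e}_c\) acts as the query over the text-encoded style tokens, and it assumes that "merged" means concatenation with \(\boldsymbol{e}_m\). The function names `cross_attention` and `build_condition`, the toy dimensions, and the input values are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query, keys, values):
    """Single-head scaled dot-product attention for one query vector.

    Assumption: no learned projection matrices; a trained model would
    apply learned query/key/value projections first.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * value[i] for w, value in zip(weights, values)) for i in range(dim)]


def build_condition(e_m, e_c, style_tokens):
    """Fuse e_c with the style tokens, then merge the result with e_m.

    Assumption: the merge is a plain concatenation; the caption only says
    the style embedding is "merged" with the music embedding.
    """
    style_embedding = cross_attention(e_c, style_tokens, style_tokens)
    return list(e_m) + style_embedding


# Toy inputs (hypothetical values, chosen only to show the shapes).
e_m = [0.1, 0.2, 0.3]                  # music embedding
e_c = [1.0, 0.0]                       # classifier feature vector
style_tokens = [[1.0, 0.0], [0.0, 1.0]]  # text-encoded style tokens

condition = build_condition(e_m, e_c, style_tokens)
print(len(condition))  # 3 music dims + 2 style dims
```

In this sketch the style embedding is a convex combination of the style tokens, weighted by their similarity to \(\boldsymbol{e}_c\), so the classifier feature decides which parts of the text-encoded style dominate the condition passed to the diffusion model.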